In this article we show how we build Docker application containers in two steps and explain why that is useful. We then walk you through writing a build script that not only builds an application container but also runs its unit tests, checks the source repository for conflicts or uncommitted changes, and tags and pushes the final image to a container registry. Finally, we expand that simple build script into one that is more modular and open to extension.

The article uses a Node.js application as an example, but these techniques are also applicable to building Ruby, Python and Go application containers.

The problem with the single build Dockerfile

Packaging applications in Docker containers is part of our day-to-day continuous deployment workflow. We realised that there is a downside to building application containers the way the documentation for the official Ruby and Node images suggests. The method illustrated in the READMEs of those images is as simple as a two-line Dockerfile:

FROM node:6-onbuild
EXPOSE 3000

The ONBUILD triggers in that image copy the current directory into the source directory in the container and run npm install while the image is built, resulting in a neat, ready-to-use container.

An experienced Docker user may have spotted the problem already. Copying the source of your application invalidates the cache of any subsequent actions, such as the npm install, bundle install or any other build steps you might have defined. This means those actions have to be repeated every time docker build is invoked on new code. This can quickly become cumbersome in a continuous deployment environment, especially for larger applications with many dependencies.

Splitting the container build process into two steps

The solution we have applied to reduce the container build time is to split the process into two steps and introduce a small bash script that executes those steps for us.

The first step is a Dockerfile that specifies the build environment for the application, such as the node image plus the tools for various build steps (gulp, CoffeeScript, uglifying, etc.). Instead of copying the source directory into that container during the build, we mount the source and output directories into it and run a build script inside the container. The build container is stateless: all build products end up in the mounted output folder, where the build script can reuse any cached build products on the next run.
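A minimal sketch of this build step, written in Bash like the article's own docker.sh. The image name `my-org/my-nodejs-build`, the `/build.sh` script inside it and the container mount points are hypothetical placeholders, as is the `DOCKER_BIN` variable, which exists only so the command can be dry-run with `DOCKER_BIN=echo`.

```shell
#!/usr/bin/env bash
# Sketch of the stateless build step. Hypothetical names: the build image
# my-org/my-nodejs-build, its /build.sh script and the mount points.
set -euo pipefail

# Set DOCKER_BIN=echo to print the docker command instead of running it.
DOCKER_BIN=${DOCKER_BIN:-docker}

# run_build_step SRC_DIR OUT_DIR IMAGE  (bind mounts need absolute host paths)
run_build_step() {
  local src_dir=$1 out_dir=$2 image=$3
  # Create the output folder ourselves so it is owned by the current user.
  mkdir -p "$out_dir"
  # Nothing is copied into the image: source and output are mounted, and
  # every build product (plus any reusable cache) lands in OUT_DIR.
  "$DOCKER_BIN" run --rm \
    -v "$src_dir":/usr/src/app \
    -v "$out_dir":/usr/src/out \
    -w /usr/src/app \
    "$image" /build.sh /usr/src/out
}

# Usage from the project root:
#   run_build_step "$PWD/src" "$PWD/dist" my-org/my-nodejs-build
```

Pre-creating the output folder matters because, with `-v`, Docker creates a missing host directory itself and it ends up owned by root.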

The second step is a Dockerfile that specifies the runtime environment of the application. This could be a clean node image or, if the result is the frontend of a single-page app, even just a clean nginx image. We add the build product to this image without any additional build steps. It might look like this:

FROM node
ADD . /usr/src/app
WORKDIR /usr/src/app
CMD node app.js
EXPOSE 3000

To execute the two build steps we add a small script called docker.sh to our application’s root folder that looks like this:

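#!/usr/bin/env bash
set -e # exit immediately on error
# Step 1: install dependencies in a throwaway build container with the source mounted
docker run -it --rm -v "$PWD":/usr/src/app -w /usr/src/app node:6 npm install
# Step 2: bake the result into the runtime image
docker build -t my-org/my-nodejs-app .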

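When we run the script, the image is assembled quickly: npm install reuses the node_modules folder that earlier runs left in the mounted source directory, and docker build only has to copy the result. The image is then ready to be shipped to the CI system and deployed to production.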

Slimming down the image

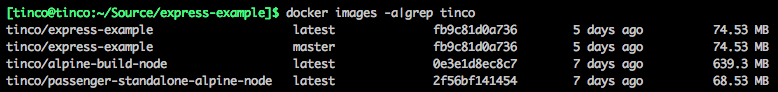

Since the build image and the base of the runtime image can differ, we can make the runtime image significantly smaller by leaving all build dependencies out of it. To illustrate, we built two Alpine-based Node.js images.

The first contains the build dependencies and is tagged as tinco/alpine-build-node. This one is quite large: not only does it have all dependencies installed, it also forgoes cleaning up its caches to make subsequent build steps quicker. Despite being based on the Alpine image, it is around the same size as the official Debian-based Node image.

The runtime image is tagged as tinco/passenger-standalone-alpine-node. It is super slim, weighing in at 69MB, just over a tenth of the official node image. Adding the application to it increases its size by just a few megabytes.

This is what the Dockerfile for our app's slimmed-down runtime image looks like:

FROM tinco/passenger-standalone-alpine-node:latest
ADD . /usr/src/app
RUN chown -R app:app /usr/src/app

The Alpine-based image we are building our app on here is a prerelease version of an official slimmed-down Passenger image; if you would like to know more about it, keep a close eye on this blog. The third line fixes the ownership of the application's files. This improves security, because files added to a Docker image are owned by root by default.

In the next section we will discuss some other features we have added to the build script to make life easier for our developers.

Automating testing, checking, tagging and pushing of containers

The docker.sh script we made builds a production-ready image, a critical step before deployment. It would be convenient if it also covered the other steps a developer should take before pushing a new release to production.

We have identified the following steps needed to get a container to production:

- Run a test suite

- Check version control

- Tag release

- Push to hub

In this article we will stick to these basic steps, and implement the bare minimum as an example; in your own script you can make these steps as elaborate as your workflow requires.

Testing the Docker container

We ship our test suites inside the production containers, so we can run the tests in exactly the environment that will run in production. To run the suite in the container, we override the container's entrypoint so that it starts the test runner instead of the application. If the test suite depends on an external service such as a database server, you can use --link to link it in.

export TEST_COMMAND="./node_modules/mocha/bin/mocha"
docker run -it --entrypoint /bin/sh my-org/my-nodejs-app -c "$TEST_COMMAND"

First we define the test command; in this case we are using the mocha binary in the node_modules directory. The -it flags (-i keeps stdin open, -t allocates a pseudo-terminal) tell Docker to run the command with an interactive terminal attached, which allows us to interact with the container as if it were any regular process started from the command line. The entrypoint is reset to /bin/sh because in a production container it would otherwise start the application instead of our tests. After the entrypoint override comes the image name from the docker build -t step, followed by -c and the test command; /bin/sh -c executes the string that follows it as a shell command.
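The `--link` approach mentioned above could look roughly like the sketch below. The Redis service, the container name `test-redis` and the alias `redis` are hypothetical; substitute whatever service your tests need. Two caveats: a real script may have to wait until the service accepts connections before starting the tests, and `--link` is a legacy Docker feature, with user-defined networks (`docker network create`) as the recommended replacement in current Docker versions. `DOCKER_BIN` is again a hypothetical dry-run hook.

```shell
#!/usr/bin/env bash
# Sketch: run the test suite inside the production image with a throwaway
# service linked in. Hypothetical: redis:alpine, the container name
# "test-redis" and the alias "redis" the tests would connect to.
set -euo pipefail

# Set DOCKER_BIN=echo to print the commands instead of running them.
DOCKER_BIN=${DOCKER_BIN:-docker}

# test_with_service IMAGE TEST_COMMAND
test_with_service() {
  local image=$1 test_cmd=$2 status=0
  "$DOCKER_BIN" run -d --name test-redis redis:alpine
  # --link makes the service reachable from the test container as "redis";
  # the quoted test command reaches /bin/sh -c as a single argument.
  "$DOCKER_BIN" run --rm --link test-redis:redis --entrypoint /bin/sh \
    "$image" -c "$test_cmd" || status=$?
  # Remove the service container whether or not the tests passed.
  "$DOCKER_BIN" rm -f test-redis
  return "$status"
}

# Usage:
#   test_with_service my-org/my-nodejs-app "./node_modules/mocha/bin/mocha"
```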

Checking version control

After some months of development with our Docker workflow, we realised that it was common for someone to build and tag a release from a personal or master branch and push it as a production container, forgetting to sync it to the staging and production branches. When version control branches or tags do not match container releases, it becomes hard to reason about what is currently running and what to roll back to if there is a problem. Once again, docker.sh is a convenient place to enforce some process for the developer.

if [[ -n "$(git status --porcelain)" ]]; then
  echo "Current working directory is dirty, run 'git status' for details."
  exit 1
fi
if [[ "$(git branch --no-color | grep "*" | awk '{ print $2 }')" != "$1" ]]; then
  echo "Current branch is not $1, checkout and merge that branch before releasing."
  exit 1
fi

Here we check the output of git status --porcelain and abort with a message if it prints anything, that is, if there are uncommitted or untracked changes. This helps prevent developers from forgetting to commit a change before releasing.

We then compare the first argument of the docker.sh invocation, a branch name, to the current branch. If they don't match, the script prints a message and aborts. This helps prevent a developer from accidentally pushing an unstable development branch to production.
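The same two checks can be sketched as reusable functions. This version reads the branch with `git rev-parse --abbrev-ref HEAD`, which prints only the branch name (or `HEAD` on a detached checkout), so there is no `git branch` output to parse. The function names are illustrative, and the functions report errors on stderr and `return 1` so the caller decides whether to exit.

```shell
#!/usr/bin/env bash
# Sketch of the release checks as functions (names are illustrative).
set -euo pipefail

# Prints the checked-out branch name, or "HEAD" when detached.
current_branch() {
  git rev-parse --abbrev-ref HEAD
}

# check_git EXPECTED_BRANCH: succeeds only on a clean tree on that branch.
check_git() {
  local expected=$1
  # --porcelain prints one line per modified, staged or untracked file.
  if [[ -n "$(git status --porcelain)" ]]; then
    echo "Current working directory is dirty, run 'git status' for details." >&2
    return 1
  fi
  # Quoted right-hand side: compare literally, not as a glob pattern.
  if [[ "$(current_branch)" != "$expected" ]]; then
    echo "Current branch is not $expected, checkout and merge that branch before releasing." >&2
    return 1
  fi
}
```

In docker.sh this would be called as `check_git "$1" || exit 1`.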

Pushing the image

The final step is simple: tag the release and push it to a Docker registry. You can push to your own private registry; in this example we push to Docker Hub:

IMAGE_NAME=my-org/my-nodejs-app # the name used in the docker build -t step
git push
docker tag "$IMAGE_NAME" "$IMAGE_NAME:$1"
docker push "$IMAGE_NAME:$1"

Before we push to Docker Hub, we first push to git. We would not want a Docker container running in production whose source code is not checked in to a repository; debugging would become a nightmare. Also, if the git push fails, we know that there are upstream changes we have not merged and that our code is not up to date. Because docker.sh runs with set -e, a failed git push also stops the script before the image is tagged or pushed.

Once that succeeds, we attach the release tag to the image and push it to Docker Hub.
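One possible variation on this step, which is our assumption rather than part of the original workflow: also tag each released image with the short commit SHA it was built from, so that a running container can be traced back to an exact commit. `push_release` and `DOCKER_BIN` are hypothetical names; `DOCKER_BIN=echo` turns the Docker commands into a dry run.

```shell
#!/usr/bin/env bash
# Variation (an assumption, not the original script): tag the image with
# both the release tag and the short git commit SHA it was built from.
set -euo pipefail

# Set DOCKER_BIN=echo to print the docker commands instead of running them.
DOCKER_BIN=${DOCKER_BIN:-docker}

# push_release IMAGE RELEASE_TAG
push_release() {
  local image=$1 tag=$2 sha t
  sha=$(git rev-parse --short HEAD)
  # Push the source first; stop before touching any image if git rejects it.
  git push || return 1
  for t in "$tag" "$sha"; do
    "$DOCKER_BIN" tag "$image" "$image:$t"
    "$DOCKER_BIN" push "$image:$t"
  done
}

# Usage:
#   push_release tinco/express-example production
```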

An example invocation of this script:

git checkout production
./docker.sh production

Finishing up the script

The script is functional so far, but it is not very extensible, and it does not cover every use case we might have for a build script. To make it powerful enough to be used in any project and for most development tasks, we split the functionality into functions and introduce command-line arguments to select the action. This is the final script we end up with:

#!/usr/bin/env bash
set -e # exit immediately on error

export IMAGE_NAME=tinco/express-example
export TEST_COMMAND="./node_modules/mocha/bin/mocha"

# Run a command in a throwaway build container with the source mounted
function run_build() {
  docker run -it --rm -v "$PWD":/usr/src/app -w /usr/src/app node:6 "$@"
}

# Run a shell command in the production image, bypassing its entrypoint
function run_image() {
  docker run -it --rm --entrypoint /bin/sh "$IMAGE_NAME" -c "$1"
}

function build_image() {
  run_build npm install
  docker build -t "$IMAGE_NAME" .
}

function test_image() {
  run_image "$TEST_COMMAND"
}

function check_git() {
  if [[ -n "$(git status --porcelain)" ]]; then
    echo "Current working directory is dirty, run 'git status' for details."
    exit 1
  fi
  if [[ "$(git branch --no-color | grep "*" | awk '{ print $2 }')" != "$TAG" ]]; then
    echo "Current branch is not $TAG, checkout and merge that branch before releasing."
    exit 1
  fi
}

function push() {
  git push
  docker tag "$IMAGE_NAME" "$IMAGE_NAME:$TAG"
  docker push "$IMAGE_NAME:$TAG"
}

case "$1" in
  build)
    build_image
    ;;
  test)
    # Run the tests in the build environment, without building the image
    run_build /bin/sh -c "$TEST_COMMAND"
    ;;
  test_image)
    test_image
    ;;
  release)
    export TAG="$2"
    if [ -z "$TAG" ]; then
      echo "Please supply the tag to be released (e.g. ./docker.sh release production)"
      exit 1
    fi
    build_image
    test_image
    check_git
    push
    ;;
  install)
    shift
    run_build npm install --save "$@"
    ;;
  *)
    echo "Usage: ./docker.sh <command>"
    echo ""
    echo "For <command> choose any of: build, test, test_image, install, release"
    exit 1
    ;;
esac

We have put the production build steps under the release command and added a few extra options, such as testing the application in the build environment, which is slightly faster because it skips building the image. Adding a new operation is as simple as adding a branch to the case statement and updating the usage information. We have also added an install option that conveniently lets us add npm dependencies.
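As an illustration of how the case statement grows, here is a sketch with a hypothetical `shell` command that opens a shell in the build environment. `run_build` is written as a function that forwards `"$@"` to `docker run`, which keeps quoted arguments and paths with spaces intact; `DOCKER_BIN` is again a hypothetical dry-run hook, and `main` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch of adding a command to docker.sh. The "shell" command and the
# names main and DOCKER_BIN are hypothetical.
set -euo pipefail

# Set DOCKER_BIN=echo to print the commands instead of running them.
DOCKER_BIN=${DOCKER_BIN:-docker}

# Run a command in a throwaway build container with the project mounted.
run_build() {
  "$DOCKER_BIN" run -it --rm -v "$PWD":/usr/src/app -w /usr/src/app node:6 "$@"
}

usage() {
  echo "Usage: ./docker.sh <command>"
  echo ""
  echo "For <command> choose any of: install, shell"
}

main() {
  case "${1:-}" in
    install)
      shift
      run_build npm install --save "$@"
      ;;
    shell) # new operation: a shell inside the build environment
      run_build /bin/sh
      ;;
    *)
      usage
      return 1
      ;;
  esac
}

# docker.sh itself would end with:  main "$@"
```

Running `./docker.sh shell` would then open a shell in the build container with the project mounted at `/usr/src/app`.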

This last option might come as a surprise: why not just run npm install --save on the host? Running it in the build container means the host needs neither Node.js nor npm, only Bash, Docker and git. You never have to manage Node.js versions on your development machines, whichever operating system they run. The entire application environment, from development to production, is encapsulated in Docker containers.

Conclusion

In this article we have explained why it can be useful to split the Docker container build process into two steps. The result is a faster build and a smaller runtime image, and the process becomes easy to extend with conveniences. We introduced a small build script that executes the two steps and then added features to it: it builds the Docker image, runs the application's tests, checks that the working copy is committed and on the right branch, and pushes the application to both the source repository and a Docker registry with the appropriate tags. In the final section we showed a finished build script that is open to further extension and covers some extra use cases.

We hope you enjoyed this article. Let us know if you have any feedback on the way we build Docker application containers in the comments below or on Hacker News.
